AMD's Inference Inferno: Justifying a $500 Price Tag

At first glance optimistic, but hard to overlook.

Advanced Micro Devices (AMD) is about to ride the biggest wave in AI yet: inference. While Nvidia’s been stealing the spotlight, AMD has been quietly building a powerhouse to dominate the real money-maker: AI’s everyday decision-making engine.

This article breaks down my $295 base case and a $500 bull case for AMD’s stock, fueled by skyrocketing revenue, increasing margins, and billion-dollar bets from tech giants like Microsoft, Meta, and Oracle.

For reference, my more conservative scenario provides a fair value of $180 per share, but this article is about considering the art of the possible.

Contents:

What is AI Inference?

AMD's AI Inference Capabilities

The Vote of Confidence to Back Up The Thesis…

How Might This Play Out?

Valuation Conclusion

AMD stock has been volatile over the last five years, hitting a high of $220 early in 2024, then dropping below $80 a little over a year later.

Three years ago, the stock traded higher than it does today. Despite the backdrop of AI, AMD has not skyrocketed the way you might expect given Nvidia's meteoric rise.

While AMD has not kept pace with Nvidia to date, I believe AMD's next rise will be underpinned by its positioning in the world of inference - and the rise could be quite shocking.

What is AI Inference?

AI Training is like going to school - it's the learning phase where an AI system studies millions of examples to understand patterns. For instance, to train a self-driving car, developers would show the model thousands or millions of images of stop signs so it learns what they look like.

AI Inference is like taking the final exam - it's when that trained AI system applies what it learned to make decisions about brand new information it's never seen before. An example of AI inference would be a self-driving car that is capable of recognising a stop sign, even on a road it has never driven on before.

Here's a simple way to think about it:

Training: Showing an AI millions of cat photos and saying "this is a cat"

Inference: The AI looking at a new photo and correctly saying "that's a cat"

Real-World Examples of Inference

You use AI inference constantly without realising it:

When ChatGPT answers your question - it's using inference

When Netflix recommends shows - that's inference

When your phone recognises your voice - inference again

When email filters catch spam - more inference

When online shopping suggests products - still inference

When you type a prompt into ChatGPT, the model is performing inference. It's using its trained knowledge to generate a response in real time, without learning anything new from your specific prompt.

Why Inference Matters More Than Training

While training gets all the headlines (because it's expensive), inference is where the real money is made. Unlike training, inference occurs after a model has been deployed into production. During inference, a model is presented with new data and responds to real-time user queries.

Think of it this way: You only need to train ChatGPT once, but millions of people use it for inference every single day. Whereas training takes place in distinct, intensive phases, inference costs are continuous after deployment.

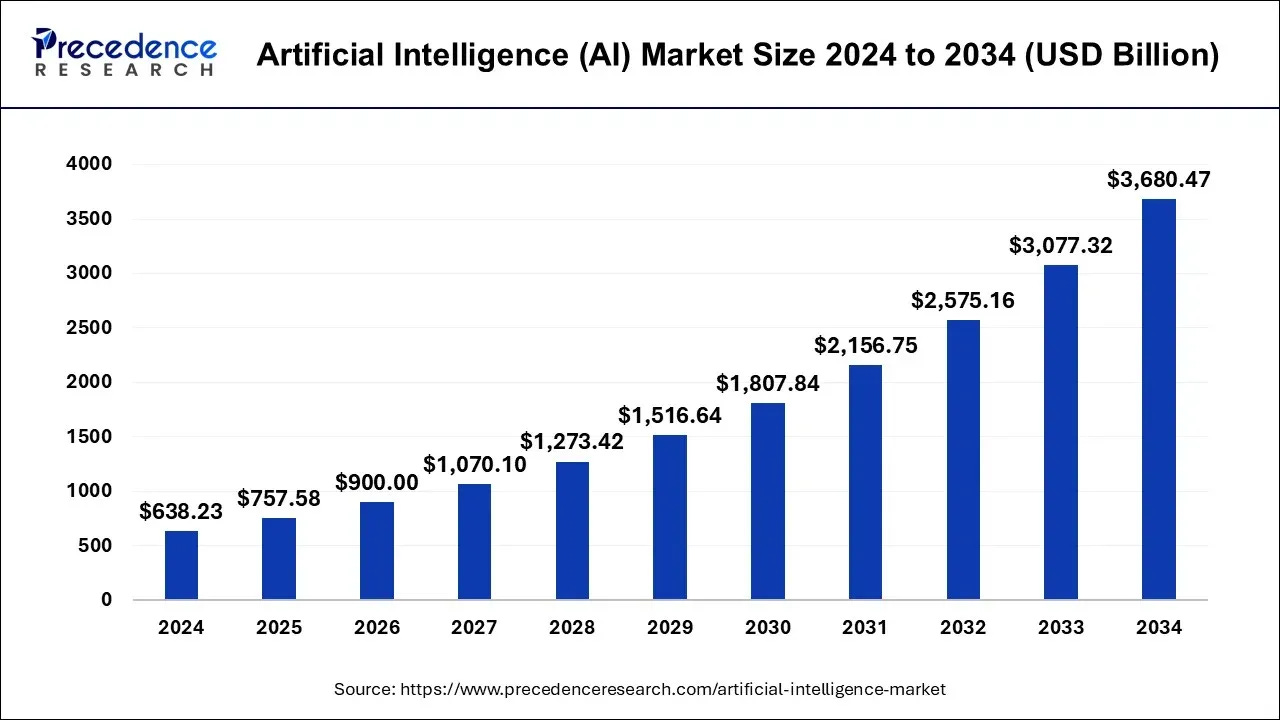

The Massive Market Opportunity

The AI inference market is absolutely enormous and growing fast:

Current Size: The global AI inference market size was estimated at USD 97.24 billion in 2024 and is projected to reach USD 253.75 billion by 2030, growing at a CAGR of 17.5% from 2025 to 2030.

Alternative Projections: Some research shows even higher numbers - The global AI Inference market is expected to grow from USD 106.15 billion in 2025 to USD 254.98 billion by 2030 at a CAGR of 19.2% during the estimated period 2025-2030.
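As a quick sanity check, you can test both projections against the standard compound-growth formula, end = start × (1 + CAGR)^years. The minimal Python sketch below backs out the growth rate implied by each pair of figures. It assumes the first projection compounds over the six years from 2024 to 2030, even though its rate is quoted for 2025-2030.

```python
# Sanity-check the quoted market projections with the compound-growth formula:
#   end = start * (1 + cagr) ** years
# Figures are copied from the two projections above (USD billions).

def project(start: float, cagr: float, years: int) -> float:
    """Grow `start` at a constant annual rate `cagr` for `years` years."""
    return start * (1 + cagr) ** years

def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1

# Projection 1: 97.24 (2024) -> 253.75 (2030), quoted CAGR 17.5%.
# Assumption: compounding over six years (2024 -> 2030).
p1 = implied_cagr(97.24, 253.75, 2030 - 2024)

# Projection 2: 106.15 (2025) -> 254.98 (2030), quoted CAGR 19.2%.
p2 = implied_cagr(106.15, 254.98, 2030 - 2025)

print(f"Projection 1 implied CAGR: {p1:.1%}")
print(f"Projection 2 implied CAGR: {p2:.1%}")
print(f"Projection 2 rebuilt at 19.2%: USD {project(106.15, 0.192, 5):.1f}bn")
```

The implied rates come out at about 17.3% and 19.2%. Both sit within a few tenths of a point of the quoted CAGRs, so the two sets of figures are internally consistent.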

Estimating the true potential size right now is impossible. Much like with the internet and mobile phones, I believe it’s likely that even the optimistic scenarios underestimate the future.

AMD has quietly been positioning itself to capitalise on this next wave in the AI rollout.

AMD’s gross profit margin has grown consistently over the last decade (below) and shows no signs of letting up just yet, while its net profit margin has stayed relatively flat.

So what’s the story here?

Well, AMD has essentially been spending its capital on investments for future gains rather than cashing out for shareholders. That reinvestment is why the net profit margin has not risen alongside the gross margin.

Last year, AMD’s R&D expenses were 26% of revenue. Nvidia’s, for comparison, were 14%.

An enormous chunk of this spend has gone into making sure AMD can capitalise on the inference wave when it arrives. Well, it is now here.

The story then at this point is as follows:

AMD did not enjoy a rapid rise like Nvidia's during the AI training wave, and many have given up on this chipmaker.

Quietly, though, behind the scenes, AMD has been preparing for the even bigger AI boom that is only just materialising, and I believe AMD is positioned incredibly well.

AMD's AI Inference Capabilities

AMD's advantage in AI inference comes from its chips carrying a lot of memory while remaining cost-effective.

Their main AI chip for these jobs, the Instinct MI300X, is equipped with a massive 192GB of high-speed memory. This is 2.4 times more than what NVIDIA's H100 offers, which is a big deal because it means large AI models, like Llama2-70B, can run entirely on just one AMD chip. This saves money and makes systems more reliable by avoiding the need to split models across multiple chips.
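The single-chip claim comes down to simple arithmetic: parameter count times bytes per parameter. Here is a rough Python sketch. It assumes 16-bit weights (2 bytes per parameter) and the 80GB H100 that the 2.4x figure implies. It counts weights only, and in practice the KV cache and activations need extra headroom.

```python
# Rough check of whether a model's weights fit in one accelerator's memory.
# Assumptions: 16-bit weights (2 bytes/parameter); weights only, so the KV cache
# and activations (which need extra memory in practice) are ignored.
import math

def weights_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes)."""
    # (params_billion * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billion * bytes_per_param

def chips_needed(params_billion: float, chip_memory_gb: float,
                 bytes_per_param: float = 2.0) -> int:
    """Minimum number of chips whose combined memory holds the weights."""
    return math.ceil(weights_gb(params_billion, bytes_per_param) / chip_memory_gb)

llama2_70b = 70  # billions of parameters
for name, mem_gb in [("MI300X", 192), ("H100", 80)]:
    print(f"{name}: {weights_gb(llama2_70b):.0f} GB of weights -> "
          f"{chips_needed(llama2_70b, mem_gb)} chip(s)")
```

At 16-bit precision, Llama2-70B needs about 140GB for its weights. That fits on one 192GB MI300X but has to be split across two 80GB H100s.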

AMD isn't stopping there. Their next chip, the MI350X, launching in the second half of 2025, will have even more memory (288GB) and is expected to be up to 35 times faster for certain AI tasks than the MI300X. Looking further ahead, the MI400 series, planned for 2026, promises 10 times better overall performance with an even larger memory capacity of up to 432GB.

This abundance of memory translates into real benefits for users. The MI300X can process large language models 40% faster than NVIDIA's H100, leading to quicker responses. It also performs very similarly to the H100 in standard industry tests, often within 2-3% of its performance. Crucially, AMD's solutions offer roughly 2 to 4 times better value for money, with hardware costs of $10,000-$15,000 compared to $30,000-$40,000 for NVIDIA's equivalent solutions. Plus, AMD's MI325X chip consistently outperforms NVIDIA's H200, especially when handling many tasks at once, where having a lot of memory is critical.
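You can make the value-for-money claim concrete by dividing the quoted price ranges. This Python sketch assumes roughly equal performance. It uses 97% of NVIDIA's throughput as a hypothetical stand-in for the "within 2-3%" benchmark results.

```python
# Price-ratio arithmetic from the hardware cost ranges quoted above.
# Assumption: roughly equal performance, so the price ratio approximates
# relative value per dollar. The 97% figure below is a hypothetical stand-in
# for the "within 2-3%" benchmark results, not a measured number.

amd_low, amd_high = 10_000, 15_000      # USD per AMD chip (quoted range)
nvda_low, nvda_high = 30_000, 40_000    # USD per NVIDIA chip (quoted range)

worst_case = nvda_low / amd_high   # cheapest NVIDIA vs. priciest AMD
best_case = nvda_high / amd_low    # priciest NVIDIA vs. cheapest AMD
midpoint = ((nvda_low + nvda_high) / 2) / ((amd_low + amd_high) / 2)

relative_perf = 0.97  # hypothetical: AMD at 97% of NVIDIA's throughput

print(f"Price ratio: {worst_case:.1f}x to {best_case:.1f}x (midpoint {midpoint:.1f}x)")
print(f"Performance-adjusted midpoint: {midpoint * relative_perf:.1f}x")
```

On these ranges the price advantage spans 2x to 4x, centred near 2.8x, or about 2.7x after the performance adjustment. This covers chip prices only. Total cost of ownership also depends on power, networking and software.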

AMD's Positioning for Inference in a Nutshell

Powerful, Flexible Chips:

AMD’s EPYC server processors are like super-smart engines, well suited to smaller AI inference tasks that don't need a dedicated accelerator. Their Instinct MI300X GPUs are built for big jobs, like powering Meta’s Llama AI models. These chips are fast, efficient, and handle the heavy lifting of AI inference with ease.

User-Friendly Software:

AMD’s ROCm software is like a toolbox that makes it easy for developers to use AMD’s chips for AI. Recent updates have doubled the MI300X’s performance, narrowing the gap with NVIDIA’s CUDA software, which has long been the industry standard. This means more companies can switch to AMD without a steep learning curve.

Game-Changing FPGAs:

In 2022, AMD completed its acquisition of Xilinx, a deal valued at $35 billion when announced, adding field-programmable gate arrays (FPGAs) to its arsenal. FPGAs are like Swiss Army knives: chips that can be reprogrammed for specific tasks. This is a big deal for AI inference, especially in edge computing (think AI on phones or drones), where they use less power and can be customized on the fly. For example, AMD’s Versal ACAP chips are up to 20 times more energy-efficient than traditional GPUs for certain tasks.

Affordable Performance:

AMD’s chips deliver top-notch performance at a lower price than NVIDIA’s. This is a huge draw for businesses running AI on a large scale, like cloud providers or enterprises, who want to save money without sacrificing quality.

The Vote of Confidence to Back Up The Thesis…

While most investors chased NVIDIA's training boom, the world's biggest tech companies quietly placed massive bets on AMD for the next phase.

Microsoft made the first move, launching AMD-powered virtual machines on Azure to run ChatGPT and Microsoft Copilot. When you chat with AI today, there's a good chance it's running on AMD chips serving millions of users simultaneously.

Meta went all-in, choosing AMD's MI300X processors to serve all live traffic for its flagship Llama AI model. This isn't a test; it's a production deployment where performance directly shapes the experience of billions of users.

Oracle doubled down with the biggest bet yet, announcing plans for AI clusters with up to 131,072 AMD GPUs. At the $10,000-$15,000 per-chip prices cited above, that's roughly $1.3-2.0 billion in chips alone, making it one of the largest AI deployments in history.

These aren't tech experiments. These are billion-dollar infrastructure decisions by companies whose business depends on AI performance.
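To put Oracle's plans in perspective, here is the chip bill for a cluster of 131,072 GPUs at the $10,000-$15,000 per-chip range quoted earlier. This is a back-of-the-envelope sketch. It assumes that range applies to every GPU in the cluster, because Oracle's actual pricing is not given here.

```python
# Back-of-the-envelope chip cost for an Oracle-scale AMD cluster.
# Assumption: the article's $10,000-$15,000 per-chip range applies to every
# GPU in the cluster; actual contract pricing is not given in the article.

gpus = 131_072
price_low, price_high = 10_000, 15_000  # USD per chip (article's range)

cost_low = gpus * price_low
cost_high = gpus * price_high
print(f"Chip cost: ${cost_low / 1e9:.2f}bn to ${cost_high / 1e9:.2f}bn")
```

That works out to roughly $1.31 billion to $1.97 billion, before networking, power and facilities.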

Why the sudden confidence? AMD's chips pack 2.4x more memory than NVIDIA's equivalent, enough to run massive AI models on a single chip instead of splitting them across multiple expensive processors. Plus, they deliver comparable performance at a fraction of the hardware cost. When you're spending billions on AI infrastructure, those savings add up fast.

The timing couldn't be better. As AI shifts from expensive training to profitable inference, where the real money gets made, AMD's advantages shine brightest. Training happens once; inference happens millions of times per day, every day.

The hyperscalers have spoken with their wallets. AMD isn't just competing with NVIDIA anymore, it's winning the contracts that matter most.

How Might This Play Out?

AMD’s AI Inference Market Opportunity by 2030

The AI inference market, projected to reach $254 billion by 2030 within the broader AI accelerator market, is a battleground where AMD can gain significant ground. Current market share estimates place NVIDIA at 80-85%, AMD at 5-7%, and others (e.g., Intel, Graphcore) at the remainder.

Base Case: 10% Market Share ($25.4 Billion Revenue)

In my base case, AMD captures 10% of the $254 billion inference market by 2030, generating $25.4 billion in inference revenue.

This aligns with a 28% revenue CAGR, growing total revenue from $31.03 billion in 2025 to $106.6 billion by 2030, with a 15% profit margin and a P/E of 30, yielding a $295 stock price. Key drivers include:

Hyperscaler Adoption: Microsoft’s Azure and Meta’s Llama deployments demonstrate AMD’s chips are production-ready. Oracle’s planned clusters of up to 131,072 AMD GPUs (roughly $1.3-2.0 billion in chips at $10,000-$15,000 each) signal massive demand, potentially adding $5-7 billion annually to inference revenue by 2030.

MI400 Launch: The MI400 series, with 432GB of memory and 10x performance over MI300X, will compete with NVIDIA’s H200 and Blackwell GPUs, capturing cost-sensitive customers. AMD’s MI325X already outperforms H200 in multi-task inference, boosting confidence.

FPGA Growth: FPGAs from Xilinx, like Versal ACAP, are gaining traction in edge computing (e.g., autonomous vehicles, IoT), where low power and flexibility matter. AWS’s use of AMD FPGAs in F1 instances supports this trend, potentially adding $2-3 billion to inference revenue.

Software Improvements: ROCm’s open-source approach is attracting developers, with updates doubling MI300X performance. This reduces NVIDIA’s CUDA advantage, encouraging more companies to switch.

This scenario assumes steady growth, doubling AMD’s current 5% share. Total data center revenue could reach $30 billion by 2030 (from $12.6 billion in 2024), with inference as a major component, driven by a 40% segment CAGR.
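The base-case numbers chain together in three steps: revenue compounds, margin turns it into earnings, and the P/E turns earnings into market value. The Python sketch below reproduces that chain. This article does not state a share count, so the sketch backs one out from the $295 target as an assumption rather than taking it from AMD's filings.

```python
# Base-case valuation chain: compound revenue -> earnings -> market cap.
# Assumption: the share count is not stated in the article, so it is backed
# out of the $295 target rather than taken from AMD's filings.

revenue_2025 = 31.03   # USD billions (article's 2025 starting point)
cagr = 0.28            # base-case revenue CAGR
years = 5              # 2025 -> 2030
margin = 0.15          # net profit margin
pe = 30                # price-to-earnings multiple
target_price = 295     # USD per share

revenue_2030 = revenue_2025 * (1 + cagr) ** years
earnings_2030 = revenue_2030 * margin
market_cap = earnings_2030 * pe              # USD billions
implied_shares = market_cap / target_price   # billions of shares (derived)

inference_revenue = 0.10 * 254  # 10% share of a $254bn inference market

print(f"2030 revenue:   ${revenue_2030:.1f}bn")
print(f"2030 earnings:  ${earnings_2030:.1f}bn")
print(f"Market cap:     ${market_cap:.0f}bn")
print(f"Implied shares: {implied_shares:.2f}bn")
print(f"Inference revenue: ${inference_revenue:.1f}bn "
      f"({inference_revenue / revenue_2030:.0%} of total)")
```

This yields about $106.6 billion of 2030 revenue, $16.0 billion of earnings and a market value near $480 billion. That implies roughly 1.63 billion shares. The $25.4 billion of inference revenue would be about a quarter of the total.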

Bull Case: 15% Market Share ($38.1 Billion Revenue)

In my bull case, AMD captures 15% of the $254 billion inference market, generating $38.1 billion in inference revenue.

This supports a 33% revenue CAGR, growing total revenue to $129 billion by 2030, with an 18% profit margin and a P/E of 35, yielding a $500 stock price. Key drivers include:

Aggressive Hyperscaler Wins: Beyond current partners, AMD could secure deals with Google, Amazon, and others, driven by cost savings (AMD’s $10,000-$15,000 chips vs. NVIDIA’s $30,000-$40,000). If AMD powers 20% of new AI clusters by 2030, inference revenue could hit $10-15 billion from hyperscalers alone.

MI400 Dominance: The MI400 series could outperform NVIDIA’s Blackwell GPUs in price and performance, especially for large-scale inference. With 432GB of memory, it’s ideal for massive models, potentially capturing 20% of cloud inference workloads.

FPGA Leadership in Edge: AMD’s FPGAs could dominate edge computing, where energy efficiency is critical. Applications in autonomous vehicles, smart cities, and IoT could add $5-7 billion in inference revenue, as Versal ACAP chips offer up to 20x better energy efficiency than traditional GPUs for certain tasks.

Software Ecosystem Shift: If ROCm becomes a true CUDA alternative, AMD could attract a wave of developers, especially in open-source communities. This could shift 5-7% of NVIDIA’s market share to AMD by 2030, as companies prioritize flexibility and cost.

Acquisitions and Talent: The ZT Systems acquisition and hires from Untether AI and Lamini enhance AMD’s end-to-end AI solutions, potentially adding $3-5 billion in revenue.

This scenario assumes AMD triples its current market share, with data center revenue reaching $40-45 billion by 2030, driven by a 50% segment CAGR. The inference market could exceed $254 billion if generative AI adoption accelerates, further boosting AMD’s potential.
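The bull-case assumptions go through the same chain. The sketch reuses the share count implied by the base case, which is an assumption rather than an official figure. This shows that the $500 and $295 targets rest on the same share base. As a hypothetical sensitivity check, the sketch also prints what the bull-case revenue and margin would be worth at the base-case P/E of 30.

```python
# Bull-case valuation, reusing the share count implied by the base case.
# Assumption: AMD's share count is not given in the article; it is backed out
# of the $295 base-case target, so both targets share the same (~1.63bn) base.

def value_per_share(revenue_start: float, cagr: float, years: int,
                    margin: float, pe: float,
                    shares_bn: float) -> tuple[float, float]:
    """Return (price per share, final revenue) for a simple P/E valuation."""
    revenue_end = revenue_start * (1 + cagr) ** years  # compound the revenue
    earnings = revenue_end * margin                    # net income
    return earnings * pe / shares_bn, revenue_end      # market cap / shares

# Hypothetical share count (billions) implied by the base case:
# $31.03bn growing 28% a year for 5 years, 15% margin, P/E 30, $295 per share.
base_market_cap = 31.03 * 1.28 ** 5 * 0.15 * 30
shares_bn = base_market_cap / 295

# Bull case: 33% CAGR, 18% margin, P/E 35.
bull_price, bull_revenue = value_per_share(31.03, 0.33, 5, 0.18, 35, shares_bn)
# Sensitivity: same revenue and margin, but the base-case P/E of 30.
bull_at_base_pe, _ = value_per_share(31.03, 0.33, 5, 0.18, 30, shares_bn)

print(f"Implied shares:       {shares_bn:.2f}bn")
print(f"Bull 2030 revenue:    ${bull_revenue:.0f}bn")
print(f"Bull target price:    ${bull_price:.0f}")
print(f"Bull price at P/E 30: ${bull_at_base_pe:.0f}")
```

The bull inputs give about $129 billion of revenue and a price of roughly $500. Holding the multiple at 30 instead of 35 brings that down to roughly $429.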

Valuation Conclusion

AMD is positioned not just to hold on to its current AI market share, but to significantly increase it.

While they may seem optimistic at first, I believe my two scenarios below are more than fathomable.

Base Case 5-Year Valuation Model: $295

Revenue - 28% Compound Annual Growth Rate

Profit Margin - 15%

P/E - 30

Bull Case 5-Year Valuation Model: $500

Revenue - 33% Compound Annual Growth Rate

Profit Margin - 18%

P/E - 35

This analysis represents my personal investment thesis and should not be considered personalised financial advice. Always conduct your own research and consider your risk tolerance before making investment decisions.